Binary installation of Kubernetes 1.22

1 Network planning and basic setup

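| IP address | Hostname | Components |
|---|---|---|
| 192.168.8.231 | k8s2-master01 | etcd, kube-apiserver, kube-controller-manager, kube-scheduler, kubelet, kube-proxy |
| 192.168.8.232 | k8s2-master02 | etcd, kube-apiserver, kube-controller-manager, kube-scheduler, kubelet, kube-proxy |
| 192.168.8.233 | k8s2-master03 | etcd, kube-apiserver, kube-controller-manager, kube-scheduler, kubelet, kube-proxy |
| 192.168.8.234 | k8s2-node01 | kubelet, kube-proxy |
| 192.168.8.235 | k8s2-node02 | kubelet, kube-proxy |
| 192.168.8.236 | k8s2-lb01 | haproxy |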

Note: whenever you change a systemd unit file, reload the configuration:

systemctl daemon-reload

1.1 Network ranges

K8s Service CIDR: 10.10.0.0/16

K8s Pod CIDR: 172.16.0.0/12

Host network: 192.168.0.0/12

Note: the host network, the Service CIDR, and the Pod CIDR must not overlap.
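Before going further it is worth confirming that the three ranges really do not overlap. Below is a minimal POSIX sh sketch using hypothetical helper names (`ip_to_int`, `cidr_range`, `cidrs_overlap`, not part of the original guide); it handles IPv4 only and does no input validation. Note that 192.168.0.0/12 is not aligned to a /12 boundary: the helper masks off the host bits, so it treats the range as 192.160.0.0 to 192.175.255.255, which is what the prefix actually describes.

```shell
#!/bin/sh
# Hypothetical helpers (not from the original guide) for checking CIDR overlap.
# IPv4 only, no validation of the input.

# ip_to_int A.B.C.D -> the address as a 32-bit integer
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1            # split the dotted quad into $1..$4
  IFS=$old_ifs
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# cidr_range NET/PREFIX -> "first last" as integers; host bits in NET are masked off
cidr_range() {
  n=$(ip_to_int "${1%/*}")
  prefix=${1#*/}
  mask=$(( prefix == 0 ? 0 : (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  first=$(( n & mask ))
  echo "$first $(( first | (~mask & 0xFFFFFFFF) ))"
}

# cidrs_overlap A B -> exit status 0 if the two blocks share at least one address
cidrs_overlap() {
  set -- $(cidr_range "$1") $(cidr_range "$2")   # $1 $2 = range A, $3 $4 = range B
  [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
}
```

For example, `cidrs_overlap 10.10.0.0/16 172.16.0.0/12 && echo overlap` prints nothing for the ranges planned above.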

1.2 Basic system configuration

1 OS version:

[root@k8s2-master01 ~]# cat /etc/redhat-release

CentOS Linux release 7.9.2009 (Core)

2 Configure /etc/hosts on all nodes

[root@k8s2-master01 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.8.231 k8s2-master01

192.168.8.232 k8s2-master02

192.168.8.233 k8s2-master03

192.168.8.234 k8s2-node01

192.168.8.235 k8s2-node02

192.168.8.236 k8s2-lb01

3 Configure the yum repositories on CentOS 7:

curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

sed -i -e '/mirrors.cloud.aliyuncs.com/d' -e '/mirrors.aliyuncs.com/d' /etc/yum.repos.d/CentOS-Base.repo

5 Install the required tools

yum install wget jq psmisc vim net-tools telnet yum-utils device-mapper-persistent-data lvm2 git -y

6 Disable firewalld, dnsmasq, and SELinux on all nodes (on CentOS 7, also disable NetworkManager; CentOS 8 does not need this)

systemctl disable --now firewalld

systemctl disable --now dnsmasq

systemctl disable --now NetworkManager

setenforce 0

sed -i 's#SELINUX=enforcing#SELINUX=disabled#g' /etc/sysconfig/selinux

sed -i 's#SELINUX=enforcing#SELINUX=disabled#g' /etc/selinux/config

7 Disable swap on all nodes and comment out the swap entry in /etc/fstab

swapoff -a && sysctl -w vm.swappiness=0

sed -ri '/^[^#]*swap/s@^@#@' /etc/fstab

8 Install ntpdate on all nodes for time synchronization

rpm -ivh http://mirrors.wlnmp.com/centos/wlnmp-release-centos.noarch.rpm

yum install ntpdate -y

9 Synchronize the time on all nodes as follows

ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

echo 'Asia/Shanghai' >/etc/timezone

ntpdate time2.aliyun.com

# Add the following entry to crontab (crontab -e)

*/5 * * * * /usr/sbin/ntpdate time2.aliyun.com

10 Configure resource limits on all nodes:

ulimit -SHn 65535

vim /etc/security/limits.conf

# Append the following to the end of the file

* soft nofile 65536

* hard nofile 131072

* soft nproc 65535

* hard nproc 655350

* soft memlock unlimited

* hard memlock unlimited
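Editing limits.conf with vim is easy to repeat by accident when the setup is re-run, which leaves duplicate entries. A minimal sketch of an idempotent alternative, using a hypothetical helper `append_once` (not part of the original guide) that appends each line only if that exact line is not already in the file:

```shell
#!/bin/sh
# Hypothetical helper (not from the original guide): append each argument as a
# line to FILE, skipping lines that are already present verbatim. Safe to re-run.
#   $1 = target file, remaining arguments = lines to ensure
append_once() {
  file=$1
  shift
  touch "$file" || return 1
  for line in "$@"; do
    # -x: whole-line match, -F: fixed string (so "*" is literal), -q: quiet
    grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
  done
}
```

Usage on a node: `append_once /etc/security/limits.conf '* soft nofile 65536' '* hard nofile 131072' '* soft nproc 65535' '* hard nproc 655350' '* soft memlock unlimited' '* hard memlock unlimited'`. The new limits apply to new login sessions.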

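11 Passwordless SSH from Master01

Master01 logs in to the other nodes without a password. During installation, all configuration files and certificates are generated on Master01, and the cluster is also managed from Master01.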

[root@k8s2-master01 ~]# ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

/root/.ssh/id_rsa already exists.

Overwrite (y/n)? y

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:LXlBnWUiexjZPTWqN1FWQSnjPvPwJ6MI5aCJXaQd1q4 root@k8s2-master01

The key's randomart image is:

+---[RSA 2048]----+

| ++.++B*|

| o.=+=*..|

| + = ooo. |

| = = o... |

| . S =..o |

| o + B .=. |

| . + E . * |

| . . oo.|

| . .. o.|

+----[SHA256]-----+

12 On Master01, copy the public key to the other nodes for passwordless login

for i in k8s2-master01 k8s2-master02 k8s2-master03 k8s2-node01 k8s2-node02 ;do ssh-copy-id -i .ssh/id_rsa.pub $i;done

13 Install the basic tools on all nodes

yum install wget jq psmisc vim net-tools yum-utils device-mapper-persistent-data lvm2 git -y

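14 Download the installation files on Master01

Note: this guide uses Du Kuan's installation repository:

https://gitee.com/dukuan/k8s-ha-install

Download the 1.22 version (k8s-ha-install-manual-installation-v1.22.x.zip)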

15 Update the system on all nodes and reboot

yum update -y

16 Kernel upgrade

CentOS 7 must be upgraded to kernel 4.18 or later; this guide installs 4.19.

[root@k8s2-master01 ~]# ls -rlht

total 58M

-rw-r--r-- 1 root root 13M Dec 22 2018 kernel-ml-devel-4.19.12-1.el7.elrepo.x86_64.rpm

-rw-r--r-- 1 root root 46M Dec 22 2018 kernel-ml-4.19.12-1.el7.elrepo.x86_64.rpm

-rw-------. 1 root root 1.4K Jan 2 21:09 anaconda-ks.cfg

-rw-r--r-- 1 root root 63K Mar 12 10:06 k8s-ha-install-manual-installation-v1.22.x.zip

Copy the kernel packages from Master01 to the other nodes:

for i in k8s2-master02 k8s2-master03 k8s2-node01 k8s2-node02; do scp kernel-ml-4.19.12-1.el7.elrepo.x86_64.rpm kernel-ml-devel-4.19.12-1.el7.elrepo.x86_64.rpm $i:/root/ ; done

Install the kernel on all nodes

cd /root && yum localinstall -y kernel-ml*

Change the default boot kernel on all nodes

grub2-set-default 0 && grub2-mkconfig -o /etc/grub2.cfg

grubby --args="user_namespace.enable=1" --update-kernel="$(grubby --default-kernel)"

Check that the default kernel is 4.19

[root@k8s2-master02 ~]# grubby --default-kernel

/boot/vmlinuz-4.19.12-1.el7.elrepo.x86_64

Reboot all nodes, then check that the running kernel is 4.19

[root@k8s2-master02 ~]# uname -a

Linux k8s2-master02 4.19.12-1.el7.elrepo.x86_64 #1 SMP Fri Dec 21 11:06:36 EST 2018 x86_64 x86_64 x86_64 GNU/Linux

17 Install ipvsadm on all nodes

Note: IPVS is more efficient than iptables, so IPVS mode is recommended

yum install ipvsadm ipset sysstat conntrack libseccomp -y

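Differences between iptables and IPVS in Kubernetes

Starting with Kubernetes 1.8, kube-proxy introduced an IPVS mode. Like iptables, IPVS is built on Netfilter, but IPVS looks up services in hash tables, while iptables walks a list of rules one by one. iptables was designed as a firewall: the more Services a cluster has, the more rules there are, and because rules are matched from top to bottom, lookups become slower and slower. Once the number of Services reaches a certain scale, the speed advantage of hash lookups becomes noticeable and improves Service performance.

The kube-proxy on each node watches the API server for changes to Services and Endpoints and writes those changes into its local backend (userspace, iptables, or IPVS) to load-balance Services, using NAT to forward traffic for the virtual IP to the endpoints. The userspace mode has long been obsolete because of its reliability and performance problems (frequent switching between kernel and user space): every client request to a Service first went through iptables and was then proxied by kube-proxy in user space to the Pod, so performance was poor.

Both IPVS and iptables are based on Netfilter; the differences are:

- IPVS offers better scalability and performance for large clusters

- IPVS supports more sophisticated load-balancing algorithms than iptables (least load, least connections, weighted, and so on)

- IPVS supports server health checking, connection retries, and similar features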

18 Configure the IPVS modules on all nodes. On kernel 4.19 and later, nf_conntrack_ipv4 has been merged into nf_conntrack; on 4.18 and earlier, use nf_conntrack_ipv4:

vim /etc/modules-load.d/ipvs.conf

# Add the following content

ip_vs

ip_vs_lc

ip_vs_wlc

ip_vs_rr

ip_vs_wrr

ip_vs_lblc

ip_vs_lblcr

ip_vs_dh

ip_vs_sh

ip_vs_fo

ip_vs_nq

ip_vs_sed

ip_vs_ftp

nf_conntrack

ip_tables

ip_set

xt_set

ipt_set

ipt_rpfilter

ipt_REJECT

ipip

Then run systemctl enable --now systemd-modules-load.service

Check that the modules are loaded:

lsmod | grep -e ip_vs -e nf_conntrack

[root@k8s2-master01 ~]# lsmod | grep -e ip_vs -e nf_conntrack

nf_conntrack_netlink 40960 0

nfnetlink 16384 3 nf_conntrack_netlink,ip_set

ip_vs_ftp 16384 0

nf_nat 32768 2 nf_nat_ipv4,ip_vs_ftp

ip_vs_sed 16384 0

ip_vs_nq 16384 0

ip_vs_fo 16384 0

ip_vs_sh 16384 0

ip_vs_dh 16384 0

ip_vs_lblcr 16384 0

ip_vs_lblc 16384 0

ip_vs_wrr 16384 0

ip_vs_rr 16384 0

ip_vs_wlc 16384 0

ip_vs_lc 16384 0

ip_vs 151552 24 ip_vs_wlc,ip_vs_rr,ip_vs_dh,ip_vs_lblcr,ip_vs_sh,ip_vs_fo,ip_vs_nq,ip_vs_lblc,ip_vs_wrr,ip_vs_lc,ip_vs_sed,ip_vs_ftp

nf_conntrack 143360 6 xt_conntrack,nf_nat,ipt_MASQUERADE,nf_nat_ipv4,nf_conntrack_netlink,ip_vs

nf_defrag_ipv6 20480 1 nf_conntrack

nf_defrag_ipv4 16384 1 nf_conntrack

libcrc32c 16384 4 nf_conntrack,nf_nat,xfs,ip_vs
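Which conntrack module to list depends on the kernel: from 4.19 on, nf_conntrack_ipv4 was merged into nf_conntrack. A minimal sketch of a hypothetical helper `conntrack_module` (not part of the original guide) that picks the module name from a kernel release string, defaulting to the running kernel as reported by `uname -r`:

```shell
#!/bin/sh
# Hypothetical helper (not from the original guide): print the conntrack module
# name to load for a kernel release such as "4.19.12-1.el7.elrepo.x86_64".
# Kernels >= 4.19 use nf_conntrack; older kernels use nf_conntrack_ipv4.
conntrack_module() {
  release=${1:-$(uname -r)}
  major=${release%%.*}            # text before the first dot
  rest=${release#*.}
  minor=${rest%%[!0-9]*}          # leading digits after the first dot
  if [ "$major" -gt 4 ] || { [ "$major" -eq 4 ] && [ "$minor" -ge 19 ]; }; then
    echo nf_conntrack
  else
    echo nf_conntrack_ipv4
  fi
}
```

On the 4.19.12 elrepo kernel installed above it prints nf_conntrack, the name used in ipvs.conf; on the stock CentOS 7 kernel (3.10) it prints nf_conntrack_ipv4.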

19 Enable the kernel parameters required by a Kubernetes cluster on all nodes

cat <<EOF > /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

fs.may_detach_mounts = 1

vm.overcommit_memory=1

net.ipv4.conf.all.route_localnet = 1

vm.panic_on_oom=0

fs.inotify.max_user_watches=89100

fs.file-max=52706963

fs.nr_open=52706963

net.netfilter.nf_conntrack_max=2310720

net.ipv4.tcp_keepalive_time = 600

net.ipv4.tcp_keepalive_probes = 3

net.ipv4.tcp_keepalive_intvl =15

net.ipv4.tcp_max_tw_buckets = 36000

net.ipv4.tcp_tw_reuse = 1

net.ipv4.tcp_max_orphans = 327680

net.ipv4.tcp_orphan_retries = 3

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_syn_backlog = 16384

net.ipv4.ip_conntrack_max = 65536

net.ipv4.tcp_timestamps = 0

net.core.somaxconn = 16384

EOF

sysctl --system
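Duplicate keys in a sysctl.d file are easy to miss, because sysctl applies the lines in order and a later assignment silently overrides an earlier one. A minimal sketch of a hypothetical check `sysctl_dup_keys` (not part of the original guide) that lists every key set more than once in a file such as /etc/sysctl.d/k8s.conf:

```shell
#!/bin/sh
# Hypothetical helper (not from the original guide): print, sorted, every key that
# is assigned more than once in a sysctl configuration file.
sysctl_dup_keys() {
  awk -F= '
    /^[ \t]*$/ || /^[ \t]*[#;]/ { next }   # skip blank lines and comments
    {
      key = $1
      gsub(/[ \t]/, "", key)               # strip spaces around the key
      count[key]++
    }
    END { for (k in count) if (count[k] > 1) print k }
  ' "$1" | sort
}
```

Running `sysctl_dup_keys /etc/sysctl.d/k8s.conf` prints nothing when every key is unique.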

After configuring the kernel parameters on all nodes, reboot them and make sure the modules are still loaded after the reboot:

lsmod | grep --color=auto -e ip_vs -e nf_conntrack

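2 Basic component installation

This section installs the components used in the cluster, such as Docker CE and the Kubernetes components.

2.1 Docker installation

Install Docker CE 19.03 on all nodes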

yum install docker-ce-19.03.* docker-ce-cli-19.03.* -y

Tip:

Newer kubelet versions recommend the systemd cgroup driver, so you can switch Docker's CgroupDriver to systemd as well.
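A minimal sketch of that change, assuming /etc/docker/daemon.json does not already hold other settings you need (the hypothetical helper below, not part of the original guide, overwrites the file). The `exec-opts` entry `native.cgroupdriver=systemd` is Docker's daemon option for selecting the cgroup driver.

```shell
#!/bin/sh
# Hypothetical helper (not from the original guide): write a Docker daemon.json that
# selects the systemd cgroup driver. The target file is OVERWRITTEN, so merge by hand
# if you already have other daemon options.
write_docker_daemon_json() {
  target=${1:-/etc/docker/daemon.json}
  mkdir -p "$(dirname "$target")" || return 1
  cat > "$target" <<'EOF'
{
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
}
```

After running it, restart Docker with `systemctl daemon-reload && systemctl restart docker` and confirm with `docker info | grep -i 'cgroup driver'`. The kubelet must then be configured with the same (systemd) cgroup driver.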

2.2 Kubernetes and etcd installation

On Master01, download the Kubernetes server package (replace 1.22.0 with the latest 1.22.x version available):

[root@k8s2-master01 ~]# wget https://dl.k8s.io/v1.22.0/kubernetes-server-linux-amd64.tar.gz

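Install the latest 1.22.x release rather than 1.22.0; the available versions are listed in the changelog: https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG/CHANGELOG-1.22.md

All of the following steps are run on Master01

Download the etcd package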

[root@k8s2-master01 ~]# wget https://github.com/etcd-io/etcd/releases/download/v3.5.0/etcd-v3.5.0-linux-amd64.tar.gz

Extract the Kubernetes binaries

[root@k8s2-master01 ~]# tar -xf kubernetes-server-linux-amd64.tar.gz --strip-components=3 -C /usr/local/bin kubernetes/server/bin/kube{let,ctl,-apiserver,-controller-manager,-scheduler,-proxy}

Extract the etcd binaries

[root@k8s2-master01 ~]# tar -zxvf etcd-v3.5.0-linux-amd64.tar.gz --strip-components=1 -C /usr/local/bin etcd-v3.5.0-linux-amd64/etcd{,ctl}

Check the versions

[root@k8s2-master01 ~]# kubelet --version

Kubernetes v1.22.0

[root@k8s2-master01 ~]# etcdctl version

etcdctl version: 3.5.0

API version: 3.5

Copy the binaries to the other nodes

MasterNodes='k8s2-master02 k8s2-master03'

WorkNodes='k8s2-node01 k8s2-node02'

for NODE in $MasterNodes; do echo $NODE; scp /usr/local/bin/kube{let,ctl,-apiserver,-controller-manager,-scheduler,-proxy} $NODE:/usr/local/bin/; scp /usr/local/bin/etcd* $NODE:/usr/local/bin/; done

for NODE in $WorkNodes; do scp /usr/local/bin/kube{let,-proxy} $NODE:/usr/local/bin/ ; done

Create the /opt/cni/bin directory on all nodes

mkdir -p /opt/cni/bin

Extract the installation files on Master01

[root@k8s2-master01 ~]# unzip k8s-ha-install-manual-installation-v1.22.x.zip

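2.3 Certificate generation

This is the most critical step of a binary installation: one wrong step breaks the whole installation, so make sure every step is correct.

On Master01, install the certificate tools cfssl and cfssljson (the downloaded binaries are in /root)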

[root@k8s2-master01 ~]# pwd

/root

[root@k8s2-master01 ~]# mv cfssl_linux-amd64 /usr/local/bin/cfssl

[root@k8s2-master01 ~]# mv cfssljson_linux-amd64 /usr/local/bin/cfssljson

[root@k8s2-master01 ~]# chmod +x /usr/local/bin/cfssl /usr/local/bin/cfssljson

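2.3.1 etcd certificates

1 Create the etcd certificate directory on all master nodes

Tip: for larger production environments, consider deploying etcd on dedicated hosts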

mkdir /etc/etcd/ssl -p

2 Create the Kubernetes directories on all nodes

mkdir -p /etc/kubernetes/pki

3 Generate the etcd certificates on Master01

The CSR (certificate signing request) files configure the domain names, company, and organizational unit

[root@k8s2-master01 ~]# cd /root/k8s-ha-install-manual-installation-v1.22.x/pki/

[root@k8s2-master01 pki]# ls

admin-csr.json ca-config.json etcd-ca-csr.json front-proxy-ca-csr.json kubelet-csr.json manager-csr.json

apiserver-csr.json ca-csr.json etcd-csr.json front-proxy-client-csr.json kube-proxy-csr.json scheduler-csr.json

[root@k8s2-master01 pki]# ls -rlht

total 48K

-rw-r--r-- 1 root root 248 Dec 11 20:47 scheduler-csr.json

-rw-r--r-- 1 root root 266 Dec 11 20:47 manager-csr.json

-rw-r--r-- 1 root root 240 Dec 11 20:47 kube-proxy-csr.json

-rw-r--r-- 1 root root 235 Dec 11 20:47 kubelet-csr.json

-rw-r--r-- 1 root root 87 Dec 11 20:47 front-proxy-client-csr.json

-rw-r--r-- 1 root root 118 Dec 11 20:47 front-proxy-ca-csr.json

-rw-r--r-- 1 root root 210 Dec 11 20:47 etcd-csr.json

-rw-r--r-- 1 root root 249 Dec 11 20:47 etcd-ca-csr.json

-rw-r--r-- 1 root root 265 Dec 11 20:47 ca-csr.json

-rw-r--r-- 1 root root 294 Dec 11 20:47 ca-config.json

-rw-r--r-- 1 root root 230 Dec 11 20:47 apiserver-csr.json

-rw-r--r-- 1 root root 225 Dec 11 20:47 admin-csr.json

4 Generate the etcd CA certificate and its key

[root@k8s2-master01 pki]# cfssl gencert -initca etcd-ca-csr.json | cfssljson -bare /etc/etcd/ssl/etcd-ca

2023/03/12 11:46:21 [INFO] generating a new CA key and certificate from CSR

2023/03/12 11:46:21 [INFO] generate received request

2023/03/12 11:46:21 [INFO] received CSR

2023/03/12 11:46:21 [INFO] generating key: rsa-2048

2023/03/12 11:46:21 [INFO] encoded CSR

2023/03/12 11:46:21 [INFO] signed certificate with serial number 479187786078963789692369865687656814068933618010

[root@k8s2-master01 pki]# ls -rlht /etc/etcd/ssl/

total 12K

-rw-r--r-- 1 root root 1.4K Mar 12 11:46 etcd-ca.pem

-rw------- 1 root root 1.7K Mar 12 11:46 etcd-ca-key.pem

-rw-r--r-- 1 root root 1005 Mar 12 11:46 etcd-ca.csr

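5 Issue the etcd certificate signed by the etcd CA

Tip: in production, reserve a few extra IPs in -hostname for future etcd expansion, so the certificate does not have to be re-issued later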

cfssl gencert \

-ca=/etc/etcd/ssl/etcd-ca.pem \

-ca-key=/etc/etcd/ssl/etcd-ca-key.pem \

-config=ca-config.json \

-hostname=127.0.0.1,k8s2-master01,k8s2-master02,k8s2-master03,192.168.8.231,192.168.8.232,192.168.8.233 \

-profile=kubernetes \

etcd-csr.json | cfssljson -bare /etc/etcd/ssl/etcd

6 Copy the certificates to the other master nodes

MasterNodes='k8s2-master02 k8s2-master03'

WorkNodes='k8s2-node01 k8s2-node02'

for NODE in $MasterNodes; do

ssh $NODE "mkdir -p /etc/etcd/ssl"

for FILE in etcd-ca-key.pem etcd-ca.pem etcd-key.pem etcd.pem; do

scp /etc/etcd/ssl/${FILE} $NODE:/etc/etcd/ssl/${FILE}

done

done

2.3.2 Kubernetes component certificates

1 Generate the Kubernetes CA on Master01

[root@k8s2-master01 pki]# cd /root/k8s-ha-install-manual-installation-v1.22.x/pki/

[root@k8s2-master01 pki]# cfssl gencert -initca ca-csr.json | cfssljson -bare /etc/kubernetes/pki/ca

2023/03/12 11:54:59 [INFO] generating a new CA key and certificate from CSR

2023/03/12 11:54:59 [INFO] generate received request

2023/03/12 11:54:59 [INFO] received CSR

2023/03/12 11:54:59 [INFO] generating key: rsa-2048

2023/03/12 11:55:00 [INFO] encoded CSR

2023/03/12 11:55:00 [INFO] signed certificate with serial number 88072748277238224767628960549021844411182591946

Check

[root@k8s2-master01 pki]# ls -rlht /etc/kubernetes/pki/

total 12K

-rw-r--r-- 1 root root 1.4K Mar 12 11:55 ca.pem

-rw------- 1 root root 1.7K Mar 12 11:55 ca-key.pem

-rw-r--r-- 1 root root 1.1K Mar 12 11:55 ca.csr

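2 Generate the apiserver certificate

- 10.10.0.1 is the first IP of the Kubernetes Service CIDR; if you change the Service CIDR, replace 10.10.0.1 with the first IP of the new range

- 192.168.8.236 is the load balancer address; if this is not an HA cluster, use Master01's IP instead

- You can reserve a few extra IPs for later use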

cfssl gencert -ca=/etc/kubernetes/pki/ca.pem -ca-key=/etc/kubernetes/pki/ca-key.pem -config=ca-config.json -hostname=10.10.0.1,192.168.8.236,127.0.0.1,kubernetes,kubernetes.default,kubernetes.default.svc,kubernetes.default.svc.cluster,kubernetes.default.svc.cluster.local,192.168.8.231,192.168.8.232,192.168.8.233 -profile=kubernetes apiserver-csr.json | cfssljson -bare /etc/kubernetes/pki/apiserver
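kube-apiserver assigns the first address of --service-cluster-ip-range to the built-in `kubernetes` Service, which is why 10.10.0.1 must be in the certificate's -hostname list. If you pick a different Service CIDR, a hypothetical helper `first_service_ip` (not part of the original guide) can compute the address to use; this sketch assumes an IPv4 CIDR and does no validation.

```shell
#!/bin/sh
# Hypothetical helper (not from the original guide): print the first usable address
# of an IPv4 CIDR, e.g. 10.10.0.0/16 -> 10.10.0.1. Host bits in the input are ignored.
first_service_ip() {
  net=${1%/*}
  prefix=${1#*/}
  old_ifs=$IFS
  IFS=.
  set -- $net                      # split the dotted quad into $1..$4
  IFS=$old_ifs
  n=$(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  n=$(( (n & mask) + 1 ))          # network address + 1
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}
```

For example, `first_service_ip 10.96.0.0/12` prints 10.96.0.1.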

Check

[root@k8s2-master01 pki]# ls -rlht /etc/kubernetes/pki/

total 24K

-rw-r--r-- 1 root root 1.4K Mar 12 11:55 ca.pem

-rw------- 1 root root 1.7K Mar 12 11:55 ca-key.pem

-rw-r--r-- 1 root root 1.1K Mar 12 11:55 ca.csr

-rw-r--r-- 1 root root 1.7K Mar 12 12:02 apiserver.pem

-rw------- 1 root root 1.7K Mar 12 12:02 apiserver-key.pem

-rw-r--r-- 1 root root 1.1K Mar 12 12:02 apiserver.csr

3 Generate the apiserver aggregation-layer certificates (front-proxy CA and client), used by the --requestheader-client-ca-file and --requestheader-allowed-names=aggregator flags

[root@k8s2-master01 pki]# cfssl gencert -initca front-proxy-ca-csr.json | cfssljson -bare /etc/kubernetes/pki/front-proxy-ca

[root@k8s2-master01 pki]# cfssl gencert -ca=/etc/kubernetes/pki/front-proxy-ca.pem -ca-key=/etc/kubernetes/pki/front-proxy-ca-key.pem -config=ca-config.json -profile=kubernetes front-proxy-client-csr.json | cfssljson -bare /etc/kubernetes/pki/front-proxy-client

Output (the warning can be ignored)

[root@k8s2-master01 pki]# cfssl gencert -initca front-proxy-ca-csr.json | cfssljson -bare /etc/kubernetes/pki/front-proxy-ca

2023/03/12 12:07:10 [INFO] generating a new CA key and certificate from CSR

2023/03/12 12:07:10 [INFO] generate received request

2023/03/12 12:07:10 [INFO] received CSR

2023/03/12 12:07:10 [INFO] generating key: rsa-2048

2023/03/12 12:07:10 [INFO] encoded CSR

2023/03/12 12:07:10 [INFO] signed certificate with serial number 170700964419426872106160615090799836219206694940

[root@k8s2-master01 pki]# cfssl gencert -ca=/etc/kubernetes/pki/front-proxy-ca.pem -ca-key=/etc/kubernetes/pki/front-proxy-ca-key.pem -config=ca-config.json -profile=kubernetes front-proxy-client-csr.json | cfssljson -bare /etc/kubernetes/pki/front-proxy-client

2023/03/12 12:07:38 [INFO] generate received request

2023/03/12 12:07:38 [INFO] received CSR

2023/03/12 12:07:38 [INFO] generating key: rsa-2048

2023/03/12 12:07:39 [INFO] encoded CSR

2023/03/12 12:07:39 [INFO] signed certificate with serial number 510078962047596769478091699146807449605760036740

2023/03/12 12:07:39 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

[root@k8s2-master01 pki]# ls -rlht /etc/kubernetes/pki/

total 48K

-rw-r--r-- 1 root root 1.4K Mar 12 11:55 ca.pem

-rw------- 1 root root 1.7K Mar 12 11:55 ca-key.pem

-rw-r--r-- 1 root root 1.1K Mar 12 11:55 ca.csr

-rw-r--r-- 1 root root 1.7K Mar 12 12:02 apiserver.pem

-rw------- 1 root root 1.7K Mar 12 12:02 apiserver-key.pem

-rw-r--r-- 1 root root 1.1K Mar 12 12:02 apiserver.csr

-rw-r--r-- 1 root root 1.2K Mar 12 12:07 front-proxy-ca.pem

-rw------- 1 root root 1.7K Mar 12 12:07 front-proxy-ca-key.pem

-rw-r--r-- 1 root root 891 Mar 12 12:07 front-proxy-ca.csr

-rw-r--r-- 1 root root 1.2K Mar 12 12:07 front-proxy-client.pem

-rw------- 1 root root 1.7K Mar 12 12:07 front-proxy-client-key.pem

-rw-r--r-- 1 root root 903 Mar 12 12:07 front-proxy-client.csr

4 Generate the controller-manager certificate

cfssl gencert \

-ca=/etc/kubernetes/pki/ca.pem \

-ca-key=/etc/kubernetes/pki/ca-key.pem \

-config=ca-config.json \

-profile=kubernetes \

manager-csr.json | cfssljson -bare /etc/kubernetes/pki/controller-manager

Check

[root@k8s2-master01 pki]# ls -rlht /etc/kubernetes/pki/controller-manager*

- Note: if this is not an HA cluster, change 192.168.8.236:8443 to Master01's address and replace 8443 with the apiserver port (6443 by default)

set-cluster: define a cluster entry

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/pki/ca.pem \

--embed-certs=true \

--server=https://192.168.8.236:8443 \

--kubeconfig=/etc/kubernetes/controller-manager.kubeconfig

set-context: define a context

kubectl config set-context system:kube-controller-manager@kubernetes \

--cluster=kubernetes \

--user=system:kube-controller-manager \

--kubeconfig=/etc/kubernetes/controller-manager.kubeconfig

set-credentials: define a user entry

kubectl config set-credentials system:kube-controller-manager \

--client-certificate=/etc/kubernetes/pki/controller-manager.pem \

--client-key=/etc/kubernetes/pki/controller-manager-key.pem \

--embed-certs=true \

--kubeconfig=/etc/kubernetes/controller-manager.kubeconfig

use-context: make this context the default

kubectl config use-context system:kube-controller-manager@kubernetes \

--kubeconfig=/etc/kubernetes/controller-manager.kubeconfig

5 Generate the scheduler certificate

cfssl gencert \

-ca=/etc/kubernetes/pki/ca.pem \

-ca-key=/etc/kubernetes/pki/ca-key.pem \

-config=ca-config.json \

-profile=kubernetes \

scheduler-csr.json | cfssljson -bare /etc/kubernetes/pki/scheduler

Check

[root@k8s2-master01 pki]# ls -rlht /etc/kubernetes/pki/scheduler*

-rw-r--r-- 1 root root 1.5K Mar 12 12:16 /etc/kubernetes/pki/scheduler.pem

-rw------- 1 root root 1.7K Mar 12 12:16 /etc/kubernetes/pki/scheduler-key.pem

-rw-r--r-- 1 root root 1.1K Mar 12 12:16 /etc/kubernetes/pki/scheduler.csr

- Note: if this is not an HA cluster, change 192.168.8.236:8443 to Master01's address and replace 8443 with the apiserver port (6443 by default)

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/pki/ca.pem \

--embed-certs=true \

--server=https://192.168.8.236:8443 \

--kubeconfig=/etc/kubernetes/scheduler.kubeconfig

kubectl config set-credentials system:kube-scheduler \

--client-certificate=/etc/kubernetes/pki/scheduler.pem \

--client-key=/etc/kubernetes/pki/scheduler-key.pem \

--embed-certs=true \

--kubeconfig=/etc/kubernetes/scheduler.kubeconfig

kubectl config set-context system:kube-scheduler@kubernetes \

--cluster=kubernetes \

--user=system:kube-scheduler \

--kubeconfig=/etc/kubernetes/scheduler.kubeconfig

kubectl config use-context system:kube-scheduler@kubernetes \

--kubeconfig=/etc/kubernetes/scheduler.kubeconfig

6 Generate the admin certificate

cfssl gencert \

-ca=/etc/kubernetes/pki/ca.pem \

-ca-key=/etc/kubernetes/pki/ca-key.pem \

-config=ca-config.json \

-profile=kubernetes \

admin-csr.json | cfssljson -bare /etc/kubernetes/pki/admin

- Note: if this is not an HA cluster, change 192.168.8.236:8443 to Master01's address and replace 8443 with the apiserver port (6443 by default)

kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/pki/ca.pem --embed-certs=true --server=https://192.168.8.236:8443 --kubeconfig=/etc/kubernetes/admin.kubeconfig

kubectl config set-credentials kubernetes-admin --client-certificate=/etc/kubernetes/pki/admin.pem --client-key=/etc/kubernetes/pki/admin-key.pem --embed-certs=true --kubeconfig=/etc/kubernetes/admin.kubeconfig

kubectl config set-context kubernetes-admin@kubernetes --cluster=kubernetes --user=kubernetes-admin --kubeconfig=/etc/kubernetes/admin.kubeconfig

kubectl config use-context kubernetes-admin@kubernetes --kubeconfig=/etc/kubernetes/admin.kubeconfig
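The controller-manager, scheduler, and admin kubeconfigs above are all built with the same four `kubectl config` calls. A minimal sketch of a hypothetical wrapper `make_kubeconfig` (not part of the original guide) that runs them, assuming the certificates are under /etc/kubernetes/pki with the names used above and taking the API server address from an optional `APISERVER` variable (defaulting to the load balancer https://192.168.8.236:8443):

```shell
#!/bin/sh
# Hypothetical helper (not from the original guide): build a component kubeconfig.
#   $1 = kubeconfig file to write
#   $2 = user name; the context is named "<user>@kubernetes"
#   $3 = certificate prefix under /etc/kubernetes/pki (e.g. "scheduler")
# Assumption: APISERVER, if set, overrides the default load-balancer address.
make_kubeconfig() {
  kcfg=$1
  user=$2
  cert=$3
  server=${APISERVER:-https://192.168.8.236:8443}
  pki=/etc/kubernetes/pki

  kubectl config set-cluster kubernetes \
    --certificate-authority="$pki/ca.pem" \
    --embed-certs=true \
    --server="$server" \
    --kubeconfig="$kcfg" || return 1

  kubectl config set-credentials "$user" \
    --client-certificate="$pki/$cert.pem" \
    --client-key="$pki/$cert-key.pem" \
    --embed-certs=true \
    --kubeconfig="$kcfg" || return 1

  kubectl config set-context "$user@kubernetes" \
    --cluster=kubernetes \
    --user="$user" \
    --kubeconfig="$kcfg" || return 1

  kubectl config use-context "$user@kubernetes" --kubeconfig="$kcfg"
}
```

For example, `make_kubeconfig /etc/kubernetes/scheduler.kubeconfig system:kube-scheduler scheduler` repeats the scheduler steps, and `make_kubeconfig /etc/kubernetes/admin.kubeconfig kubernetes-admin admin` the admin steps.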

7 Create the ServiceAccount key pair

openssl genrsa -out /etc/kubernetes/pki/sa.key 2048

openssl rsa -in /etc/kubernetes/pki/sa.key -pubout -out /etc/kubernetes/pki/sa.pub

8 Copy the certificates and kubeconfig files to the other master nodes

for NODE in k8s2-master02 k8s2-master03; do

for FILE in $(ls /etc/kubernetes/pki | grep -v etcd); do

scp /etc/kubernetes/pki/${FILE} $NODE:/etc/kubernetes/pki/${FILE};

done;

for FILE in admin.kubeconfig controller-manager.kubeconfig scheduler.kubeconfig; do

scp /etc/kubernetes/${FILE} $NODE:/etc/kubernetes/${FILE};

done;

done

[root@k8s2-master01 pki]# ls /etc/kubernetes/pki/ |wc -l

23

[root@k8s2-master01 pki]# ls /etc/kubernetes/pki/

admin.csr apiserver.pem controller-manager-key.pem front-proxy-client.csr scheduler.csr

admin-key.pem ca.csr controller-manager.pem front-proxy-client-key.pem scheduler-key.pem

admin.pem ca-key.pem front-proxy-ca.csr front-proxy-client.pem scheduler.pem

apiserver.csr ca.pem front-proxy-ca-key.pem sa.key

apiserver-key.pem controller-manager.csr front-proxy-ca.pem sa.pub

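3 Kubernetes system component configuration

3.1 etcd configuration

The etcd configuration is nearly identical on every master; change the node name and IP addresses for each Master node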
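In this guide only five keys differ between the three masters, which makes copy-paste mistakes easy. A minimal sketch of a hypothetical helper `etcd_node_keys` (not part of the original guide) that prints those per-node lines from a hostname and IP, assuming the 2379 (client) and 2380 (peer) ports and the loopback client URL used here; the rest of etcd.config.yml stays as shown for Master01.

```shell
#!/bin/sh
# Hypothetical helper (not from the original guide): print the node-specific keys
# of /etc/etcd/etcd.config.yml for one master.
#   $1 = etcd member name (hostname), $2 = node IP
etcd_node_keys() {
  name=$1
  ip=$2
  cat <<EOF
name: '${name}'
listen-peer-urls: 'https://${ip}:2380'
listen-client-urls: 'https://${ip}:2379,http://127.0.0.1:2379'
initial-advertise-peer-urls: 'https://${ip}:2380'
advertise-client-urls: 'https://${ip}:2379'
EOF
}
```

For example, `etcd_node_keys k8s2-master03 192.168.8.233` prints the lines that must differ on Master03.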

3.1.1 Master01

[root@k8s2-master01 pki]# vim /etc/etcd/etcd.config.yml

name: 'k8s2-master01'

data-dir: /var/lib/etcd

wal-dir: /var/lib/etcd/wal

snapshot-count: 5000

heartbeat-interval: 100

election-timeout: 1000

quota-backend-bytes: 0

listen-peer-urls: 'https://192.168.8.231:2380'

listen-client-urls: 'https://192.168.8.231:2379,http://127.0.0.1:2379'

max-snapshots: 3

max-wals: 5

cors:

initial-advertise-peer-urls: 'https://192.168.8.231:2380'

advertise-client-urls: 'https://192.168.8.231:2379'

discovery:

discovery-fallback: 'proxy'

discovery-proxy:

discovery-srv:

initial-cluster: 'k8s2-master01=https://192.168.8.231:2380,k8s2-master02=https://192.168.8.232:2380,k8s2-master03=https://192.168.8.233:2380'

initial-cluster-token: 'etcd-k8s-cluster'

initial-cluster-state: 'new'

strict-reconfig-check: false

enable-v2: true

enable-pprof: true

proxy: 'off'

proxy-failure-wait: 5000

proxy-refresh-interval: 30000

proxy-dial-timeout: 1000

proxy-write-timeout: 5000

proxy-read-timeout: 0

client-transport-security:

  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'

  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'

  client-cert-auth: true

  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'

  auto-tls: true

peer-transport-security:

  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'

  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'

  peer-client-cert-auth: true

  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'

  auto-tls: true

debug: false

log-package-levels:

log-outputs: [default]

force-new-cluster: false

3.1.2 Master02

[root@k8s2-master02 ~]# vim /etc/etcd/etcd.config.yml

name: 'k8s2-master02'

data-dir: /var/lib/etcd

wal-dir: /var/lib/etcd/wal

snapshot-count: 5000

heartbeat-interval: 100

election-timeout: 1000

quota-backend-bytes: 0

listen-peer-urls: 'https://192.168.8.232:2380'

listen-client-urls: 'https://192.168.8.232:2379,http://127.0.0.1:2379'

max-snapshots: 3

max-wals: 5

cors:

initial-advertise-peer-urls: 'https://192.168.8.232:2380'

advertise-client-urls: 'https://192.168.8.232:2379'

discovery:

discovery-fallback: 'proxy'

discovery-proxy:

discovery-srv:

initial-cluster: 'k8s2-master01=https://192.168.8.231:2380,k8s2-master02=https://192.168.8.232:2380,k8s2-master03=https://192.168.8.233:2380'

initial-cluster-token: 'etcd-k8s-cluster'

initial-cluster-state: 'new'

strict-reconfig-check: false

enable-v2: true

enable-pprof: true

proxy: 'off'

proxy-failure-wait: 5000

proxy-refresh-interval: 30000

proxy-dial-timeout: 1000

proxy-write-timeout: 5000

proxy-read-timeout: 0

client-transport-security:

  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'

  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'

  client-cert-auth: true

  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'

  auto-tls: true

peer-transport-security:

  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'

  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'

  peer-client-cert-auth: true

  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'

  auto-tls: true

debug: false

log-package-levels:

log-outputs: [default]

force-new-cluster: false

3.1.3 Master03

[root@k8s2-master03 ~]# vim /etc/etcd/etcd.config.yml

name: 'k8s2-master03'

data-dir: /var/lib/etcd

wal-dir: /var/lib/etcd/wal

snapshot-count: 5000

heartbeat-interval: 100

election-timeout: 1000

quota-backend-bytes: 0

listen-peer-urls: 'https://192.168.8.233:2380'

listen-client-urls: 'https://192.168.8.233:2379,http://127.0.0.1:2379'

max-snapshots: 3

max-wals: 5

cors:

initial-advertise-peer-urls: 'https://192.168.8.233:2380'

advertise-client-urls: 'https://192.168.8.233:2379'

discovery:

discovery-fallback: 'proxy'

discovery-proxy:

discovery-srv:

initial-cluster: 'k8s2-master01=https://192.168.8.231:2380,k8s2-master02=https://192.168.8.232:2380,k8s2-master03=https://192.168.8.233:2380'

initial-cluster-token: 'etcd-k8s-cluster'

initial-cluster-state: 'new'

strict-reconfig-check: false

enable-v2: true

enable-pprof: true

proxy: 'off'

proxy-failure-wait: 5000

proxy-refresh-interval: 30000

proxy-dial-timeout: 1000

proxy-write-timeout: 5000

proxy-read-timeout: 0

client-transport-security:

  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'

  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'

  client-cert-auth: true

  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'

  auto-tls: true

peer-transport-security:

  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'

  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'

  peer-client-cert-auth: true

  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'

  auto-tls: true

debug: false

log-package-levels:

log-outputs: [default]

force-new-cluster: false

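3.1.4 Create the etcd service

On all master nodes, create the etcd systemd unit (for example /usr/lib/systemd/system/etcd.service) and start it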

[Unit]

Description=Etcd Service

Documentation=https://coreos.com/etcd/docs/latest/

After=network.target

[Service]

Type=notify

ExecStart=/usr/local/bin/etcd --config-file=/etc/etcd/etcd.config.yml

Restart=on-failure

RestartSec=10

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

Alias=etcd3.service

On all master nodes, create the etcd certificate directory under /etc/kubernetes/pki and link the certificates into it

mkdir /etc/kubernetes/pki/etcd

ln -s /etc/etcd/ssl/* /etc/kubernetes/pki/etcd/

systemctl daemon-reload

systemctl enable --now etcd

Check the etcd status

export ETCDCTL_API=3

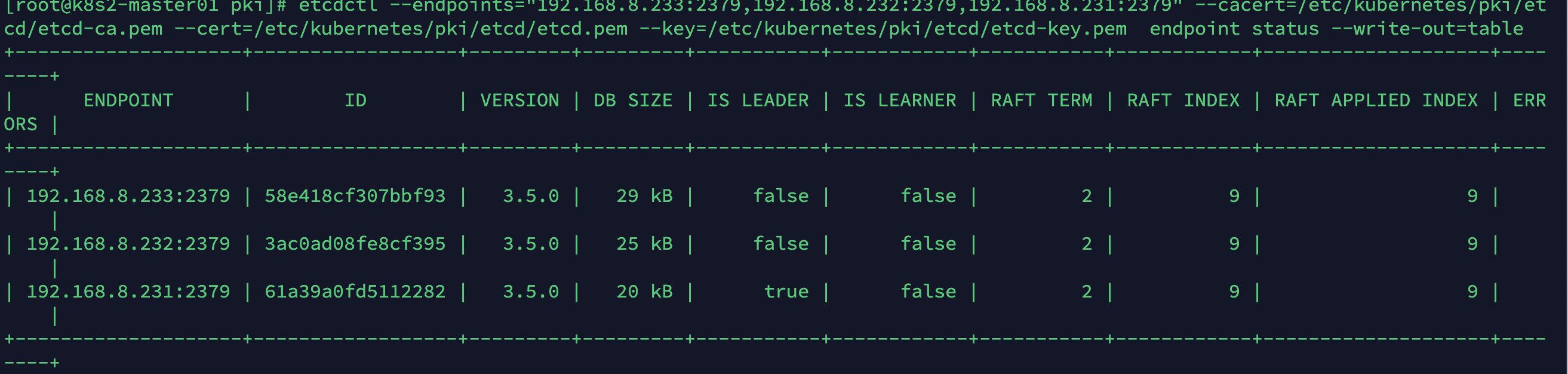

etcdctl --endpoints="192.168.8.233:2379,192.168.8.232:2379,192.168.8.231:2379" --cacert=/etc/kubernetes/pki/etcd/etcd-ca.pem --cert=/etc/kubernetes/pki/etcd/etcd.pem --key=/etc/kubernetes/pki/etcd/etcd-key.pem endpoint status --write-out=table

In the output, 192.168.8.231:2379 is the leader (IS LEADER is true)

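4 High-availability configuration

High-availability setup (note: if this is not an HA cluster, haproxy and keepalived do not need to be installed)

If you are installing on a public cloud, you can also skip this section and use the cloud provider's load balancer instead, such as Alibaba Cloud SLB or Tencent Cloud ELB.

On a public cloud, use the provider's load balancer (for example Alibaba Cloud SLB or Tencent Cloud ELB) in place of haproxy and keepalived, because most public clouds do not support keepalived. Also, on Alibaba Cloud the kubectl client should not run on a master node: Alibaba Cloud SLB has a loopback problem (a server behind the SLB cannot reach the SLB itself). Tencent Cloud has fixed this issue, so Tencent Cloud is recommended.

SLB -> haproxy -> apiserver

Note: because this is a test environment, there is only one LB node; in production, use three identically configured LB nodes fronted by a load balancer.

Note: keepalived cannot be used on Alibaba Cloud or Tencent Cloud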

[root@k8s2-lb01 ~]# yum install haproxy -y

[root@k8s2-lb01 ~]# >/etc/haproxy/haproxy.cfg

[root@k8s2-lb01 ~]# vim /etc/haproxy/haproxy.cfg

global

maxconn 2000

ulimit-n 16384

log 127.0.0.1 local0 err

stats timeout 30s

defaults

log global

mode http

option httplog

timeout connect 5000

timeout client 50000

timeout server 50000

timeout http-request 15s

timeout http-keep-alive 15s

frontend k8s-master

bind 0.0.0.0:8443

bind 127.0.0.1:8443

mode tcp

option tcplog

tcp-request inspect-delay 5s

default_backend k8s-master

backend k8s-master

mode tcp

option tcplog

option tcp-check

balance roundrobin

default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100

server k8s2-master01 192.168.8.231:6443 check

server k8s2-master02 192.168.8.232:6443 check

server k8s2-master03 192.168.8.233:6443 check

Start haproxy on the LB node

systemctl daemon-reload

systemctl enable --now haproxy

Check the listening port

[root@k8s2-lb01 ~]# netstat -lntup|grep 8443

tcp 0 0 127.0.0.1:8443 0.0.0.0:* LISTEN 15172/haproxy

tcp 0 0 0.0.0.0:8443 0.0.0.0:* LISTEN 15172/haproxy

5 Kubernetes component configuration

Create the required directories on all nodes

mkdir -p /etc/kubernetes/manifests/ /etc/systemd/system/kubelet.service.d /var/lib/kubelet /var/log/kubernetes

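5.1 Apiserver

Create the kube-apiserver service on all master nodes. Note: if this is not an HA cluster, change 192.168.8.236 to Master01's address

Note: this guide uses 10.10.0.0/16 as the Kubernetes Service CIDR. It must not overlap with the host network or the Pod CIDR; adjust it as needed

5.1.1 Master01 configuration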

[root@k8s2-master01 pki]# vim /usr/lib/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/usr/local/bin/kube-apiserver \

--v=2 \

--logtostderr=true \

--allow-privileged=true \

--bind-address=0.0.0.0 \

--secure-port=6443 \

--insecure-port=0 \

--advertise-address=192.168.8.231 \

--service-cluster-ip-range=10.10.0.0/16 \

--service-node-port-range=30000-32767 \

--etcd-servers=https://192.168.8.231:2379,https://192.168.8.232:2379,https://192.168.8.233:2379 \

--etcd-cafile=/etc/etcd/ssl/etcd-ca.pem \

--etcd-certfile=/etc/etcd/ssl/etcd.pem \

--etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \

--client-ca-file=/etc/kubernetes/pki/ca.pem \

--tls-cert-file=/etc/kubernetes/pki/apiserver.pem \

--tls-private-key-file=/etc/kubernetes/pki/apiserver-key.pem \

--kubelet-client-certificate=/etc/kubernetes/pki/apiserver.pem \

--kubelet-client-key=/etc/kubernetes/pki/apiserver-key.pem \

--service-account-key-file=/etc/kubernetes/pki/sa.pub \

--service-account-signing-key-file=/etc/kubernetes/pki/sa.key \

--service-account-issuer=https://kubernetes.default.svc.cluster.local \

--kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,NodeRestriction,ResourceQuota \

--authorization-mode=Node,RBAC \

--enable-bootstrap-token-auth=true \

--requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem \

--proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.pem \

--proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client-key.pem \

--requestheader-allowed-names=aggregator \

--requestheader-group-headers=X-Remote-Group \

--requestheader-extra-headers-prefix=X-Remote-Extra- \

--requestheader-username-headers=X-Remote-User

# --token-auth-file=/etc/kubernetes/token.csv

Restart=on-failure

RestartSec=10s

LimitNOFILE=65535

[Install]

WantedBy=multi-user.target
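The master02 and master03 unit files below are identical to master01's except for --advertise-address. Instead of editing each copy by hand, you can derive it with sed. This is a minimal sketch; the function name render_apiserver_unit is hypothetical and not part of any tool:

```bash
#!/usr/bin/env bash
# Hypothetical helper: read a kube-apiserver unit file on stdin and print it
# with --advertise-address rewritten to the IPv4 address given as $1.
render_apiserver_unit() {
  local ip=$1
  # Keep any leading indentation and the trailing " \" continuation;
  # only the address after "--advertise-address=" is replaced.
  sed -E "s#^([[:space:]]*--advertise-address=)[0-9.]+#\1${ip}#"
}

# Example (run on master01; assumes root SSH access as elsewhere in this guide):
# render_apiserver_unit 192.168.8.232 < /usr/lib/systemd/system/kube-apiserver.service \
#   | ssh k8s2-master02 'cat > /usr/lib/systemd/system/kube-apiserver.service'
```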

5.1.1 Master02 configuration

[root@k8s2-master02 ~]# vim /usr/lib/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/usr/local/bin/kube-apiserver \

--v=2 \

--logtostderr=true \

--allow-privileged=true \

--bind-address=0.0.0.0 \

--secure-port=6443 \

--insecure-port=0 \

--advertise-address=192.168.8.232 \

--service-cluster-ip-range=10.10.0.0/16 \

--service-node-port-range=30000-32767 \

--etcd-servers=https://192.168.8.231:2379,https://192.168.8.232:2379,https://192.168.8.233:2379 \

--etcd-cafile=/etc/etcd/ssl/etcd-ca.pem \

--etcd-certfile=/etc/etcd/ssl/etcd.pem \

--etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \

--client-ca-file=/etc/kubernetes/pki/ca.pem \

--tls-cert-file=/etc/kubernetes/pki/apiserver.pem \

--tls-private-key-file=/etc/kubernetes/pki/apiserver-key.pem \

--kubelet-client-certificate=/etc/kubernetes/pki/apiserver.pem \

--kubelet-client-key=/etc/kubernetes/pki/apiserver-key.pem \

--service-account-key-file=/etc/kubernetes/pki/sa.pub \

--service-account-signing-key-file=/etc/kubernetes/pki/sa.key \

--service-account-issuer=https://kubernetes.default.svc.cluster.local \

--kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,NodeRestriction,ResourceQuota \

--authorization-mode=Node,RBAC \

--enable-bootstrap-token-auth=true \

--requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem \

--proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.pem \

--proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client-key.pem \

--requestheader-allowed-names=aggregator \

--requestheader-group-headers=X-Remote-Group \

--requestheader-extra-headers-prefix=X-Remote-Extra- \

--requestheader-username-headers=X-Remote-User

# --token-auth-file=/etc/kubernetes/token.csv

Restart=on-failure

RestartSec=10s

LimitNOFILE=65535

[Install]

WantedBy=multi-user.target

5.1.1 Master03 configuration

[root@k8s2-master03 ~]# vim /usr/lib/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/usr/local/bin/kube-apiserver \

--v=2 \

--logtostderr=true \

--allow-privileged=true \

--bind-address=0.0.0.0 \

--secure-port=6443 \

--insecure-port=0 \

--advertise-address=192.168.8.233 \

--service-cluster-ip-range=10.10.0.0/16 \

--service-node-port-range=30000-32767 \

--etcd-servers=https://192.168.8.231:2379,https://192.168.8.232:2379,https://192.168.8.233:2379 \

--etcd-cafile=/etc/etcd/ssl/etcd-ca.pem \

--etcd-certfile=/etc/etcd/ssl/etcd.pem \

--etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \

--client-ca-file=/etc/kubernetes/pki/ca.pem \

--tls-cert-file=/etc/kubernetes/pki/apiserver.pem \

--tls-private-key-file=/etc/kubernetes/pki/apiserver-key.pem \

--kubelet-client-certificate=/etc/kubernetes/pki/apiserver.pem \

--kubelet-client-key=/etc/kubernetes/pki/apiserver-key.pem \

--service-account-key-file=/etc/kubernetes/pki/sa.pub \

--service-account-signing-key-file=/etc/kubernetes/pki/sa.key \

--service-account-issuer=https://kubernetes.default.svc.cluster.local \

--kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,NodeRestriction,ResourceQuota \

--authorization-mode=Node,RBAC \

--enable-bootstrap-token-auth=true \

--requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem \

--proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.pem \

--proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client-key.pem \

--requestheader-allowed-names=aggregator \

--requestheader-group-headers=X-Remote-Group \

--requestheader-extra-headers-prefix=X-Remote-Extra- \

--requestheader-username-headers=X-Remote-User

# --token-auth-file=/etc/kubernetes/token.csv

Restart=on-failure

RestartSec=10s

LimitNOFILE=65535

[Install]

WantedBy=multi-user.target
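In these ExecStart blocks, every flag line except the last must end with a backslash. If one backslash is missing, the command line ends at that point and the remaining flag lines are no longer passed to kube-apiserver. The rough lint sketch below uses a hypothetical function name. It reports any line that starts with -- when the preceding non-blank line does not end in a backslash:

```bash
#!/usr/bin/env bash
# Hypothetical lint: print every "--flag" line in a unit file that is not
# reached by a backslash continuation. Returns 1 if any are found.
find_orphan_flags() {
  awk '
    NF == 0 { next }                       # skip blank lines
    /^[[:space:]]*--/ && !cont {           # flag line not continued from above
      printf "%s:%d: %s\n", FILENAME, FNR, $0
      bad = 1
    }
    { cont = ($0 ~ /\\[[:space:]]*$/) }    # does this line continue onto the next?
    END { exit bad }
  ' "$1"
}

# Usage: find_orphan_flags /usr/lib/systemd/system/kube-apiserver.service
```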

5.1.2 Start the apiserver

Start kube-apiserver on all Master nodes

systemctl daemon-reload && systemctl enable --now kube-apiserver

Check the kube-apiserver status

systemctl status kube-apiserver

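5.2 Configure the ControllerManager

Configure the kube-controller-manager service on all Master nodes (the configuration is identical on every master).

Note: this document uses 172.16.0.0/12 as the k8s Pod network. It must not overlap with the host network or the k8s Service network. Adjust it as needed.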
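Two CIDR blocks overlap exactly when they share the same network address under the shorter of their two prefix lengths. The following is a minimal pure-bash sketch of that check, with hypothetical function names. You can run it against the Pod, Service and host networks before writing them into the configs:

```bash
#!/usr/bin/env bash
# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# cidr_overlap NET1/P1 NET2/P2: exit status 0 if the two blocks overlap.
cidr_overlap() {
  local n1=${1%/*} p1=${1#*/} n2=${2%/*} p2=${2#*/}
  local i1 i2 p mask
  i1=$(ip_to_int "$n1")
  i2=$(ip_to_int "$n2")
  p=$(( p1 < p2 ? p1 : p2 ))                         # shorter (wider) prefix
  mask=$(( (0xFFFFFFFF << (32 - p)) & 0xFFFFFFFF ))  # bash arithmetic is 64-bit
  (( (i1 & mask) == (i2 & mask) ))
}

# Usage with this document's networks:
# cidr_overlap 172.16.0.0/12 10.10.0.0/16 && echo "Pod and Service networks overlap"
```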

[root@k8s2-master01 pki]# vim /usr/lib/systemd/system/kube-controller-manager.service

kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/usr/local/bin/kube-controller-manager \

--v=2 \

--logtostderr=true \

--address=127.0.0.1 \

--root-ca-file=/etc/kubernetes/pki/ca.pem \

--cluster-signing-cert-file=/etc/kubernetes/pki/ca.pem \

--cluster-signing-key-file=/etc/kubernetes/pki/ca-key.pem \

--service-account-private-key-file=/etc/kubernetes/pki/sa.key \

--kubeconfig=/etc/kubernetes/controller-manager.kubeconfig \

--leader-elect=true \

--use-service-account-credentials=true \

--node-monitor-grace-period=40s \

--node-monitor-period=5s \

--pod-eviction-timeout=2m0s \

--controllers=*,bootstrapsigner,tokencleaner \

--allocate-node-cidrs=true \

--cluster-cidr=172.16.0.0/12 \

--requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem \

--node-cidr-mask-size=24

Restart=always

RestartSec=10s

[Install]

WantedBy=multi-user.target

Start kube-controller-manager on all Master nodes

systemctl daemon-reload

systemctl enable --now kube-controller-manager

Check the status

systemctl status kube-controller-manager

5.3 Configure the Scheduler

Configure the kube-scheduler service on all Master nodes (the configuration is identical on every master).

[root@k8s2-master01 pki]# vim /usr/lib/systemd/system/kube-scheduler.service

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/usr/local/bin/kube-scheduler \

--v=2 \

--logtostderr=true \

--address=127.0.0.1 \

--leader-elect=true \

--kubeconfig=/etc/kubernetes/scheduler.kubeconfig

Restart=always

RestartSec=10s

[Install]

WantedBy=multi-user.target

Start the service and check the status

systemctl daemon-reload

systemctl enable --now kube-scheduler

systemctl status kube-scheduler

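5.4 TLS Bootstrapping configuration

1 Create the bootstrap configuration on Master01 only

* Note: if this is not an HA cluster, replace 192.168.8.236:8443 with master01's address and the apiserver port (6443 by default).

[root@k8s2-master01 bootstrap]# cd /root/k8s-ha-install-manual-installation-v1.22.x/bootstrap

2 Run the following commands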

kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/pki/ca.pem --embed-certs=true --server=https://192.168.8.236:8443 --kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig

kubectl config set-credentials tls-bootstrap-token-user --token=c8ad9c.2e4d610cf3e7426e --kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig

kubectl config set-context tls-bootstrap-token-user@kubernetes --cluster=kubernetes --user=tls-bootstrap-token-user --kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig

kubectl config use-context tls-bootstrap-token-user@kubernetes --kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig

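Note: if you change token-id and token-secret in bootstrap.secret.yaml, keep the strings consistent everywhere they appear in that file, including the Secret name bootstrap-token-<token-id>, and keep the same lengths. Also make sure the token passed to --token in the commands above (c8ad9c.2e4d610cf3e7426e) matches your new values.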
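Bootstrap tokens take the form <token-id>.<token-secret>. The id is 6 characters and the secret is 16 characters, both drawn from [a-z0-9]. If you replace c8ad9c.2e4d610cf3e7426e, a sketch like the following can generate a new value and check its format (the function names are hypothetical). The hex characters produced by od are a subset of the allowed alphabet:

```bash
#!/usr/bin/env bash
# Check that $1 looks like a bootstrap token: 6-char id "." 16-char secret.
valid_bootstrap_token() {
  [[ $1 =~ ^[a-z0-9]{6}\.[a-z0-9]{16}$ ]]
}

# Generate a random token from /dev/urandom (3 bytes -> 6 hex chars, 8 bytes -> 16).
gen_bootstrap_token() {
  local id secret
  id=$(od -An -N3 -tx1 /dev/urandom | tr -d ' \n')
  secret=$(od -An -N8 -tx1 /dev/urandom | tr -d ' \n')
  printf '%s.%s\n' "$id" "$secret"
}

# Usage:
# TOKEN=$(gen_bootstrap_token)
# echo "token-id=${TOKEN%%.*} token-secret=${TOKEN#*.}"
```

Use the id part for token-id and in the Secret name bootstrap-token-<token-id>. Pass the full string to --token.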

3 Configure kubectl on master01 only

[root@k8s2-master01 bootstrap]# mkdir -p /root/.kube ; cp /etc/kubernetes/admin.kubeconfig /root/.kube/config

Continue only if the cluster status can be queried normally. Otherwise, troubleshoot the k8s components first.

[root@k8s2-master01 bootstrap]# kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-1 Healthy {"health":"true","reason":""}

etcd-0 Healthy {"health":"true","reason":""}

etcd-2 Healthy {"health":"true","reason":""}

4 Finally, create the bootstrap Secret and RBAC bindings

[root@k8s2-master01 bootstrap]# kubectl create -f bootstrap.secret.yaml

secret/bootstrap-token-c8ad9c created

clusterrolebinding.rbac.authorization.k8s.io/kubelet-bootstrap created

clusterrolebinding.rbac.authorization.k8s.io/node-autoapprove-bootstrap created

clusterrolebinding.rbac.authorization.k8s.io/node-autoapprove-certificate-rotation created

clusterrole.rbac.authorization.k8s.io/system:kube-apiserver-to-kubelet created

clusterrolebinding.rbac.authorization.k8s.io/system:kube-apiserver created

5.5 Node configuration

5.5.1 Copy the certificates

Copy the certificates from Master01 to the other nodes

cd /etc/kubernetes/

for NODE in k8s2-master02 k8s2-master03 k8s2-node01 k8s2-node02; do

ssh $NODE mkdir -p /etc/kubernetes/pki /etc/etcd/ssl

for FILE in etcd-ca.pem etcd.pem etcd-key.pem; do

scp /etc/etcd/ssl/$FILE $NODE:/etc/etcd/ssl/

done

for FILE in pki/ca.pem pki/ca-key.pem pki/front-proxy-ca.pem bootstrap-kubelet.kubeconfig; do

scp /etc/kubernetes/$FILE $NODE:/etc/kubernetes/${FILE}

done

done

5.5.2 Kubelet configuration

Create the required directories on all nodes

mkdir -p /var/lib/kubelet /var/log/kubernetes /etc/systemd/system/kubelet.service.d /etc/kubernetes/manifests/

Configure the kubelet service on all nodes

vim /usr/lib/systemd/system/kubelet.service

[Unit]

Description=Kubernetes Kubelet

Documentation=https://github.com/kubernetes/kubernetes

After=docker.service

Requires=docker.service

[Service]

ExecStart=/usr/local/bin/kubelet

Restart=always

StartLimitInterval=0

RestartSec=10

[Install]

WantedBy=multi-user.target

Configure the kubelet service drop-in file on all nodes

[root@k8s2-master01 kubernetes]# vim /etc/systemd/system/kubelet.service.d/10-kubelet.conf

[root@k8s2-master01 kubernetes]# cat /etc/systemd/system/kubelet.service.d/10-kubelet.conf

[Service]

Environment="KUBELET_KUBECONFIG_ARGS=--bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig --kubeconfig=/etc/kubernetes/kubelet.kubeconfig"

Environment="KUBELET_SYSTEM_ARGS=--network-plugin=cni --cni-conf-dir=/etc/cni/net.d --cni-bin-dir=/opt/cni/bin"

Environment="KUBELET_CONFIG_ARGS=--config=/etc/kubernetes/kubelet-conf.yml --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.5"

Environment="KUBELET_EXTRA_ARGS=--node-labels=node.kubernetes.io/node='' "

ExecStart=

ExecStart=/usr/local/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_CONFIG_ARGS $KUBELET_SYSTEM_ARGS $KUBELET_EXTRA_ARGS

Create the kubelet configuration file

Note: if you changed the k8s Service network, set clusterDNS in kubelet-conf.yml to the tenth address of the k8s Service network, for example 10.10.0.10.
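The "tenth address" is the network address of the Service CIDR plus 10. The first address, base + 1, is taken by the kubernetes Service itself. Here is a small bash sketch, with hypothetical function names, that computes the value for any IPv4 Service CIDR:

```bash
#!/usr/bin/env bash
# Convert a 32-bit integer back to dotted IPv4 notation.
int_to_ip() {
  local n=$1
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# nth_service_ip CIDR N: the N-th address after the CIDR's network address.
nth_service_ip() {
  local net=${1%/*} prefix=${1#*/} n=$2 a b c d mask base
  IFS=. read -r a b c d <<< "$net"
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  base=$(( ((a << 24) | (b << 16) | (c << 8) | d) & mask ))
  int_to_ip $(( base + n ))
}

# Usage: nth_service_ip 10.10.0.0/16 10   # prints 10.10.0.10, the clusterDNS value
```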

vim /etc/kubernetes/kubelet-conf.yml

apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
address: 0.0.0.0
port: 10250
readOnlyPort: 10255
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 2m0s
    enabled: true
  x509:
    clientCAFile: /etc/kubernetes/pki/ca.pem
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 5m0s
    cacheUnauthorizedTTL: 30s
cgroupDriver: systemd
cgroupsPerQOS: true
clusterDNS:
- 10.10.0.10
clusterDomain: cluster.local
containerLogMaxFiles: 5
containerLogMaxSize: 10Mi
contentType: application/vnd.kubernetes.protobuf
cpuCFSQuota: true
cpuManagerPolicy: none
cpuManagerReconcilePeriod: 10s
enableControllerAttachDetach: true
enableDebuggingHandlers: true
enforceNodeAllocatable:
- pods
eventBurst: 10
eventRecordQPS: 5
evictionHard:
  imagefs.available: 15%
  memory.available: 100Mi
  nodefs.available: 10%
  nodefs.inodesFree: 5%
evictionPressureTransitionPeriod: 5m0s
failSwapOn: true
fileCheckFrequency: 20s
hairpinMode: promiscuous-bridge
healthzBindAddress: 127.0.0.1
healthzPort: 10248
httpCheckFrequency: 20s
imageGCHighThresholdPercent: 85
imageGCLowThresholdPercent: 80
imageMinimumGCAge: 2m0s
iptablesDropBit: 15
iptablesMasqueradeBit: 14
kubeAPIBurst: 10
kubeAPIQPS: 5
makeIPTablesUtilChains: true
maxOpenFiles: 1000000
maxPods: 110
nodeStatusUpdateFrequency: 10s
oomScoreAdj: -999
podPidsLimit: -1
registryBurst: 10
registryPullQPS: 5
resolvConf: /etc/resolv.conf
rotateCertificates: true
runtimeRequestTimeout: 2m0s
serializeImagePulls: true
staticPodPath: /etc/kubernetes/manifests
streamingConnectionIdleTimeout: 4h0m0s
syncFrequency: 1m0s
volumeStatsAggPeriod: 1m0s

Start kubelet on all nodes

systemctl daemon-reload

systemctl enable --now kubelet

Check the result

systemctl status kubelet

Check the cluster status

[root@k8s2-master01 kubernetes]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s2-master01 NotReady <none> 51s v1.22.0

k8s2-master02 NotReady <none> 48s v1.22.0

k8s2-master03 NotReady <none> 47s v1.22.0

k8s2-node01 NotReady <none> 46s v1.22.0

k8s2-node02 NotReady <none> 45s v1.22.0

5.6 kube-proxy configuration

Note: if this is not an HA cluster, replace 192.168.8.236:8443 with master01's address and the apiserver port (6443 by default).

Run the following steps on Master01 only

[root@k8s2-master01 kubernetes]# cd /root/k8s-ha-install-manual-installation-v1.22.x

kubectl -n kube-system create serviceaccount kube-proxy

kubectl create clusterrolebinding system:kube-proxy --clusterrole system:node-proxier --serviceaccount kube-system:kube-proxy

SECRET=$(kubectl -n kube-system get sa/kube-proxy \

--output=jsonpath='{.secrets[0].name}')

JWT_TOKEN=$(kubectl -n kube-system get secret/$SECRET \

--output=jsonpath='{.data.token}' | base64 -d)

PKI_DIR=/etc/kubernetes/pki

K8S_DIR=/etc/kubernetes

kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/pki/ca.pem --embed-certs=true --server=https://192.168.8.236:8443 --kubeconfig=${K8S_DIR}/kube-proxy.kubeconfig

kubectl config set-credentials kubernetes --token=${JWT_TOKEN} --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig

kubectl config set-context kubernetes --cluster=kubernetes --user=kubernetes --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig

kubectl config use-context kubernetes --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig

Send the kubeconfig to the other nodes

for NODE in k8s2-master02 k8s2-master03 k8s2-node01 k8s2-node02; do

scp /etc/kubernetes/kube-proxy.kubeconfig $NODE:/etc/kubernetes/kube-proxy.kubeconfig

done

Add the kube-proxy service and configuration files on all nodes:

vim /usr/lib/systemd/system/kube-proxy.service

[Unit]

Description=Kubernetes Kube Proxy

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/usr/local/bin/kube-proxy \

--config=/etc/kubernetes/kube-proxy.yaml \

--v=2

Restart=always

RestartSec=10s

[Install]

WantedBy=multi-user.target

If you changed the cluster's Pod network, set clusterCIDR in kube-proxy.yaml to your own Pod network:

vim /etc/kubernetes/kube-proxy.yaml

apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: 0.0.0.0
clientConnection:
  acceptContentTypes: ""
  burst: 10
  contentType: application/vnd.kubernetes.protobuf
  kubeconfig: /etc/kubernetes/kube-proxy.kubeconfig
  qps: 5
clusterCIDR: 172.16.0.0/12
configSyncPeriod: 15m0s
conntrack:
  max: null
  maxPerCore: 32768
  min: 131072
  tcpCloseWaitTimeout: 1h0m0s
  tcpEstablishedTimeout: 24h0m0s
enableProfiling: false
healthzBindAddress: 0.0.0.0:10256
hostnameOverride: ""
iptables:
  masqueradeAll: false
  masqueradeBit: 14
  minSyncPeriod: 0s
  syncPeriod: 30s
ipvs:
  masqueradeAll: true
  minSyncPeriod: 5s
  scheduler: "rr"
  syncPeriod: 30s
kind: KubeProxyConfiguration
metricsBindAddress: 127.0.0.1:10249
mode: "ipvs"
nodePortAddresses: null
oomScoreAdj: -999
portRange: ""
udpIdleTimeout: 250ms
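mode: "ipvs" depends on the kernel's IPVS modules being loaded on every node. The sketch below reads lsmod output on stdin and prints each expected module that is missing. The function name and the default module list are assumptions, so adjust the list to your kernel and chosen IPVS scheduler:

```bash
#!/usr/bin/env bash
# Print each module from the list ($1, space-separated; a default list is assumed)
# that does not appear in the lsmod-style listing read from stdin.
# Prints nothing if every module is loaded.
missing_ipvs_modules() {
  local mods="${1:-ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack}"
  local loaded m
  loaded=$(awk 'NR > 1 { print $1 }')   # first column, skipping the lsmod header
  for m in $mods; do
    grep -qx "$m" <<< "$loaded" || echo "$m"
  done
}

# Usage on a node:  lsmod | missing_ipvs_modules
```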

Start kube-proxy on all nodes

systemctl daemon-reload

systemctl enable --now kube-proxy

systemctl status kube-proxy

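6 Install Calico

6.1 Install the officially recommended version (recommended)

1 Run the following steps on master01 only

cd /root/k8s-ha-install-manual-installation-v1.22.x/calico

2 Change Calico's network: replace the POD_CIDR placeholder with your own Pod network

sed -i "s#POD_CIDR#172.16.0.0/12#g" calico.yaml

3 Verify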

[root@k8s2-master01 calico]# grep "CALICO_IPV4POOL_CIDR" -A 1 calico.yaml

- name: CALICO_IPV4POOL_CIDR

value: "172.16.0.0/12"
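You can also script the visual check from step 3. The sketch below fails if the POD_CIDR placeholder is still present, or if the pool CIDR differs from the intended Pod network. The function name is hypothetical, and the sketch assumes the value: line directly follows the name: line, as in the grep output above:

```bash
#!/usr/bin/env bash
# check_calico_pool_cidr FILE EXPECTED_CIDR: 0 if CALICO_IPV4POOL_CIDR is set to EXPECTED_CIDR.
check_calico_pool_cidr() {
  local file=$1 want=$2 got
  if grep -q 'POD_CIDR' "$file"; then
    echo "placeholder POD_CIDR still present in $file" >&2
    return 1
  fi
  # Take the line after the env var name and extract the quoted CIDR value.
  got=$(grep -A1 'CALICO_IPV4POOL_CIDR' "$file" | sed -nE 's/.*value: *"?([0-9./]+)"?.*/\1/p') || true
  [ "$got" = "$want" ]
}

# Usage: check_calico_pool_cidr calico.yaml 172.16.0.0/12 && kubectl apply -f calico.yaml
```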

4 Apply

kubectl apply -f calico.yaml

5 Check the Pod status

[root@k8s2-master01 calico]# kubectl get po -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-66686fdb54-z5l7z 1/1 Running 0 3m4s

calico-node-54b2x 1/1 Running 0 3m4s

calico-node-9zz7x 1/1 Running 0 3m4s

calico-node-bsmml 1/1 Running 0 3m4s

calico-node-lgzw8 1/1 Running 0 3m4s

calico-node-rf5wz 1/1 Running 0 3m4s

calico-typha-67c6dc57d6-dd45r 1/1 Running 0 3m4s

calico-typha-67c6dc57d6-w4bpv 1/1 Running 0 3m4s

calico-typha-67c6dc57d6-xxrdd 1/1 Running 0 3m4s

If a container is in an abnormal state, use kubectl describe or kubectl logs to inspect it.

Check the cluster

[root@k8s2-master01 calico]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s2-master01 Ready <none> 25m v1.22.0

k8s2-master02 Ready <none> 25m v1.22.0

k8s2-master03 Ready <none> 25m v1.22.0

k8s2-node01 Ready <none> 25m v1.22.0

k8s2-node02 Ready <none> 25m v1.22.0

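7 Install CoreDNS

If you changed the k8s Service network, change CoreDNS's Service IP to the tenth IP of the k8s Service network.

cd /root/k8s-ha-install-manual-installation-v1.22.x/CoreDNS

COREDNS_SERVICE_IP=`kubectl get svc | grep kubernetes | awk '{print $3}'`0

This takes the IP of the kubernetes Service (10.10.0.1) and appends 0 to get the tenth address, 10.10.0.10. The trick only works when the Service network's address ends in .0, as 10.10.0.0/16 does.

Check the placeholder IP in the manifest

[root@k8s2-master01 CoreDNS]# grep 192.168.0.10 coredns.yaml

clusterIP: 192.168.0.10

Replace it

[root@k8s2-master01 CoreDNS]# sed -i "s#192.168.0.10#${COREDNS_SERVICE_IP}#g" coredns.yaml

Install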

[root@k8s2-master01 CoreDNS]# kubectl create -f coredns.yaml

serviceaccount/coredns created

clusterrole.rbac.authorization.k8s.io/system:coredns created

clusterrolebinding.rbac.authorization.k8s.io/system:coredns created

configmap/coredns created

deployment.apps/coredns created

Check the status

[root@k8s2-master01 CoreDNS]# kubectl get po -n kube-system -l k8s-app=kube-dns

NAME READY STATUS RESTARTS AGE

coredns-7684f7549-8kwvk 1/1 Running 0 98s

8 Install Metrics Server

Recent Kubernetes versions collect resource metrics through Metrics Server, which reports CPU and memory usage for nodes and Pods.

cd /root/k8s-ha-install-manual-installation-v1.22.x/metrics-server

kubectl create -f comp.yaml

Wait for metrics-server to start, then check the status

[root@k8s2-master01 metrics-server]# kubectl top node

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

k8s2-master01 131m 6% 2231Mi 58%

k8s2-master02 102m 5% 1572Mi 41%

k8s2-master03 107m 5% 1658Mi 40%

k8s2-node01 341m 8% 1037Mi 13%

k8s2-node02 50m 1% 996Mi 12%

Tip: if you use the latest Metrics Server release, add the following arguments to comp.yaml, including the front-proxy CA certificate:

containers:
- args:
  - --cert-dir=/tmp
  - --secure-port=4443
  - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
  - --kubelet-use-node-status-port
  - --metric-resolution=15s
  - --kubelet-insecure-tls
  - --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem # change to front-proxy-ca.crt for kubeadm
  - --requestheader-username-headers=X-Remote-User
  - --requestheader-group-headers=X-Remote-Group
  - --requestheader-extra-headers-prefix=X-Remote-Extra-

9 Cluster validation

Create a Pod

cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: Pod
metadata:
  name: busybox
  namespace: default
spec:
  containers:
  - name: busybox
    image: busybox:1.28
    command:
      - sleep
      - "3600"
    imagePullPolicy: IfNotPresent
  restartPolicy: Always
EOF

- Pods must be able to resolve Services

[root@k8s2-master01 CoreDNS]# kubectl exec busybox -n default -- nslookup kubernetes

Server: 10.10.0.10

Address 1: 10.10.0.10 kube-dns.kube-system.svc.cluster.local

Name: kubernetes

Address 1: 10.10.0.1 kubernetes.default.svc.cluster.local

Enter the busybox Pod and ping www.baidu.com

[root@k8s2-master01 CoreDNS]# kubectl exec -it busybox -- /bin/sh

/ # ping www.baidu.com

PING www.baidu.com (110.242.68.4): 56 data bytes

64 bytes from 110.242.68.4: seq=0 ttl=52 time=12.878 ms

- Pods must be able to resolve Services in other namespaces

[root@k8s2-master01 CoreDNS]# kubectl exec busybox -n default -- nslookup kube-dns.kube-system

Server: 10.10.0.10

Address 1: 10.10.0.10 kube-dns.kube-system.svc.cluster.local

Name: kube-dns.kube-system

Address 1: 10.10.0.10 kube-dns.kube-system.svc.cluster.local
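Short names such as kube-dns.kube-system resolve because of how a Pod's resolv.conf is set up. Under the default ClusterFirst DNS policy, a Pod in the default namespace gets the search list default.svc.cluster.local svc.cluster.local cluster.local, with options ndots:5. The sketch below, using a hypothetical function name, lists the names a glibc-style resolver would try, in order. The second candidate is the FQDN shown in the output above:

```bash
#!/usr/bin/env bash
# dns_candidates NAME NDOTS SEARCH...: print the order in which NAME is queried.
dns_candidates() {
  local name=$1 ndots=$2 d dots
  shift 2
  dots=${name//[^.]/}                  # keep only the dots in NAME
  if (( ${#dots} >= ndots )); then
    echo "$name"                       # enough dots: try the name as-is first
  fi
  for d in "$@"; do
    echo "$name.$d"                    # then each search domain in order
  done
  if (( ${#dots} < ndots )); then
    echo "$name"                       # otherwise the bare name is tried last
  fi
}

# Usage, with the search list of a Pod in the default namespace:
# dns_candidates kube-dns.kube-system 5 default.svc.cluster.local svc.cluster.local cluster.local
```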

- Every node must be able to reach the kubernetes Service on port 443 and the kube-dns Service on port 53

[root@k8s2-master01 CoreDNS]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.10.0.1 <none> 443/TCP 129m

[root@k8s2-master01 CoreDNS]# kubectl get svc -n kube-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

calico-typha ClusterIP 10.10.196.60 <none> 5473/TCP 48m

kube-dns ClusterIP 10.10.0.10 <none> 53/UDP,53/TCP,9153/TCP 5m50s

metrics-server ClusterIP 10.10.224.158 <none> 443/TCP 36m

Run telnet from both master and node hosts

[root@k8s2-master02 ~]# telnet 10.10.0.1 443

Trying 10.10.0.1...

Connected to 10.10.0.1.

Escape character is '^]'.

^ZConnection closed by foreign host.

[root@k8s2-master02 ~]# telnet 10.10.0.10 53

Trying 10.10.0.10...

Connected to 10.10.0.10.

Escape character is '^]'.

- Pods must be able to communicate with each other

a) within the same namespace

b) across namespaces

c) across hosts

10 Install the Dashboard

[root@k8s2-master01 dashboard]# cd /root/k8s-ha-install-manual-installation-v1.22.x/dashboard

kubectl create -f .

After installation, the kubernetes-dashboard Service is a NodePort Service on port 31472

[root@k8s2-master01 dashboard]# kubectl get svc -A -o wide

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

default kubernetes ClusterIP 10.10.0.1 <none> 443/TCP 101m <none>

kube-system calico-typha ClusterIP 10.10.196.60 <none> 5473/TCP 19m k8s-app=calico-typha

kube-system metrics-server ClusterIP 10.10.224.158 <none> 443/TCP 6m54s k8s-app=metrics-server

kubernetes-dashboard dashboard-metrics-scraper ClusterIP 10.10.132.4 <none> 8000/TCP 96s k8s-app=dashboard-metrics-scraper

kubernetes-dashboard kubernetes-dashboard NodePort 10.10.181.55 <none> 443:31472/TCP 96s k8s-app=kubernetes-dashboard

Open the NodePort on any node's address

https://192.168.8.234:31472

View the token:

[root@k8s2-master01 dashboard]# kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}')

Name: admin-user-token-2ndcg

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: admin-user

kubernetes.io/service-account.uid: bfcf43b8-639d-4f47-9f85-623791de1636

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1411 bytes

namespace: 11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6Imo3V0h2QWdfd3NJbHpTQ3BlNTQ4MEZZeU5rb1NMdFlrWC1iZ1R2bHlmVW8ifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLTJuZGNnIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiJiZmNmNDNiOC02MzlkLTRmNDctOWY4NS02MjM3OTFkZTE2MzYiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZS1zeXN0ZW06YWRtaW4tdXNlciJ9.NmKqrx75FH8JwXd30ASDuIjuUM7gDJdXIRKKwukBIaRQ7QUlmiZt9jjxvGfT7N2cKqNgJ6P74IE3uW-FL9fi5CVb9w5iJ-MzOMx5a_9of1y8vfFIpnOzCATkhOdWHOCRxUWAkf3mTdCF3pa8NXkLSjci7VMn0tsNCU8fjC71V1sWkf0DwTMufRIGv2HkSEhYCZ421G1Mr6DTh6f76TFFIYff-HJ2pxOx_GLbO1ZcZ27XPywO6QrnHoqf8h0QKkK6X7teLoPNz2Zbv2IB3v25oT8LVJ3B4wwm9GjaxSTJlSE3G6I-migtlPlx6RM44tPlitf0IFeRY3Obe-7W_tcfcw


Paste the token value into the Token field and click Sign in to access the Dashboard.
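The token is a JWT whose middle segment is base64url-encoded JSON. Before pasting a token, you can decode it locally to confirm which ServiceAccount it belongs to, via its sub claim. This sketch, with a hypothetical function name, does not verify the signature:

```bash
#!/usr/bin/env bash
# Print the decoded payload (second dot-separated segment) of the JWT given as $1.
jwt_payload() {
  local p
  p=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')   # base64url -> base64 alphabet
  case $(( ${#p} % 4 )) in                             # restore the stripped padding
    2) p="${p}==" ;;
    3) p="${p}=" ;;
  esac
  printf '%s' "$p" | base64 -d
}

# Usage: jwt_payload "$TOKEN"   # look for "sub":"system:serviceaccount:kube-system:admin-user"
```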

11 Key production configuration

11.1 Docker tuning

[root@k8s2-master01 CoreDNS]# vim /etc/docker/daemon.json

[root@k8s2-master01 CoreDNS]# cat /etc/docker/daemon.json

{

"exec-opts": ["native.cgroupdriver=systemd"],

"registry-mirrors": ["https://zy7d25a7.mirror.aliyuncs.com"],

"max-concurrent-downloads": 10,

"max-concurrent-uploads": 5,

"log-opts": {

"max-size": "300m",

"max-file": "2" },

"live-restore": true

}
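A malformed daemon.json prevents dockerd from starting. The cgroup driver set here must also match cgroupDriver: systemd in kubelet-conf.yml. The following is a pre-restart check sketch. It assumes python3 is available for the JSON syntax check, and the function name is hypothetical:

```bash
#!/usr/bin/env bash
# Validate a Docker daemon.json (default path shown) before restarting Docker.
check_daemon_json() {
  local f=${1:-/etc/docker/daemon.json}
  if ! python3 -m json.tool "$f" > /dev/null; then
    echo "$f: invalid JSON" >&2
    return 1
  fi
  if ! grep -q '"native.cgroupdriver=systemd"' "$f"; then
    echo "$f: exec-opts does not set native.cgroupdriver=systemd" >&2
    return 1
  fi
}

# Usage: check_daemon_json && systemctl restart docker
```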

[root@k8s2-master01 CoreDNS]# systemctl daemon-reload

[root@k8s2-master01 CoreDNS]# systemctl restart docker

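systemctl status docker

- "log-opts": { "max-size": "300m", "max-file": "2" }

max-size: maximum size of a single log file before it is rotated; must be greater than 0

max-file: maximum number of log files kept; must be greater than 0

By default, as long as a container is not recreated, its log file keeps growing and will gradually fill the server's disk. These are the same files that the commonly used docker logs command reads from.

- "live-restore": true

By default, when the Docker daemon terminates, it shuts down running containers. You can configure the daemon so that containers keep running while the daemon is unavailable. This feature is called live-restore, and it helps reduce container downtime caused by daemon crashes, planned outages, or upgrades.

In practice, changing Docker's configuration requires reloading the daemon. Without live-restore, that restarts the containers and briefly interrupts the service, which is risky in production. Enabling live-restore keeps containers running while the daemon is unavailable.

- max-concurrent-downloads

Increases the number of parallel downloads

Pulling an image is an HTTP download, so image layers can be downloaded in parallel. Docker provides the max-concurrent-downloads option for this.

Note, however, that docker pull loads network I/O, disk I/O and CPU at the same time. Choose this value based on the node's resources, the image sizes and the network.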

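11.2 Enable certificate rotation

Start kubelet with --feature-gates=RotateKubeletClientCertificate=true,RotateKubeletServerCertificate=true. Kubelet then automatically submits a CSR to renew its own certificate when that certificate is about to expire. Adding --rotate-certificates makes kubelet automatically load the new certificate.

The controller manager likewise needs --feature-gates=RotateKubeletServerCertificate=true at startup. Together with the ClusterRoleBindings created earlier, this lets the kubelet certificates be signed automatically. However, the CSR approver built into kube-controller-manager does not auto-approve kubelet serving-certificate CSRs. Those must be approved manually (kubectl certificate approve) or by a separate approver.

Certificate validity

During TLS bootstrapping, kube-controller-manager signs the certificates, so it also controls their validity period. It provides the --experimental-cluster-signing-duration flag to set the validity of the certificates it signs. Newer releases replace it with --cluster-signing-duration, the flag used below.

vim /usr/lib/systemd/system/kube-controller-manager.service

Add

--cluster-signing-duration=876000h0m0s \

Note: although this sets 100 years, the kubelet certificate appears to be valid for at most about 5 years in practice.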

[root@k8s2-master03 ~]# cat /usr/lib/systemd/system/kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/usr/local/bin/kube-controller-manager \

--v=2 \

--logtostderr=true \

--address=127.0.0.1 \

--root-ca-file=/etc/kubernetes/pki/ca.pem \

--cluster-signing-cert-file=/etc/kubernetes/pki/ca.pem \

--cluster-signing-key-file=/etc/kubernetes/pki/ca-key.pem \

--service-account-private-key-file=/etc/kubernetes/pki/sa.key \

--kubeconfig=/etc/kubernetes/controller-manager.kubeconfig \

--leader-elect=true \

--cluster-signing-duration=876000h0m0s \

--use-service-account-credentials=true \

--node-monitor-grace-period=40s \

--node-monitor-period=5s \

--pod-eviction-timeout=2m0s \

--controllers=*,bootstrapsigner,tokencleaner \

--allocate-node-cidrs=true \

--cluster-cidr=172.16.0.0/12 \

--requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem \

--node-cidr-mask-size=24

Restart=always

RestartSec=10s

[Install]

WantedBy=multi-user.target

Restart and check the status

systemctl daemon-reload

systemctl restart kube-controller-manager

systemctl status kube-controller-manager
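876000h is 36500 days, or about 100 years. To see the validity a kubelet client certificate actually received, check its notAfter date with openssl. In this sketch the function name is hypothetical. It assumes GNU date and the kubelet's default certificate directory, /var/lib/kubelet/pki:

```bash
#!/usr/bin/env bash
# Print the number of whole days until the certificate in $1 expires.
cert_days_left() {
  local end
  end=$(openssl x509 -in "$1" -noout -enddate | cut -d= -f2)   # strip the "notAfter=" prefix
  echo $(( ($(date -d "$end" +%s) - $(date +%s)) / 86400 ))
}

# Usage on a node:
# cert_days_left /var/lib/kubelet/pki/kubelet-client-current.pem
```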

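11.3 Specify TLS cipher suites for Kubernetes

The default cipher suites selected by etcd, kube-apiserver and kubelet include ECDHE-RSA-DES-CBC3-SHA, which is too weak and may be a security risk. To avoid this, configure etcd, kube-apiserver and kubelet to use cipher suites that give the cluster strong protection.

Add the following

--tls-cipher-suites=TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384 --image-pull-progress-deadline=30m

- TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384

- Many TLS ciphers have known vulnerabilities and weaknesses, so the default set does not offer strong protection. Kubernetes supports many encryption methods and cipher suites, and restricting them to strong ones hardens the components.

- Impact: clients that do not support modern ciphers will be unable to connect to the Kubelet API.

- --image-pull-progress-deadline=30m sets the image pull deadline: if an image pull makes no progress within this time, it is cancelled. The default is 1 minute, so increase it as needed for large images.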

vim /etc/systemd/system/kubelet.service.d/10-kubelet.conf

[Service]

Environment="KUBELET_KUBECONFIG_ARGS=--bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig --kubeconfig=/etc/kubernetes/kubelet.kubeconfig"

Environment="KUBELET_SYSTEM_ARGS=--network-plugin=cni --cni-conf-dir=/etc/cni/net.d --cni-bin-dir=/opt/cni/bin"

Environment="KUBELET_CONFIG_ARGS=--config=/etc/kubernetes/kubelet-conf.yml --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.5"

Environment="KUBELET_EXTRA_ARGS=--node-labels=node.kubernetes.io/node='' --tls-cipher-suites=TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384 --image-pull-progress-deadline=30m"

ExecStart=

ExecStart=/usr/local/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_CONFIG_ARGS $KUBELET_SYSTEM_ARGS $KUBELET_EXTRA_ARGS

vim /etc/kubernetes/kubelet-conf.yml

rotateServerCertificates: true
allowedUnsafeSysctls:
- "net.core*"
- "net.ipv4.*"
kubeReserved:
  cpu: "1"
  memory: 1Gi
  ephemeral-storage: 10Gi
systemReserved:
  cpu: "1"
  memory: 1Gi
  ephemeral-storage: 10Gi

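- Kubernetes schedules onto nodes based on their resource capacity, and by default Pods can use all of a node's available capacity. This causes a problem, because a node usually also runs a number of system daemons that power the OS and Kubernetes itself. Unless resources are reserved for these daemons, they compete with Pods for resources and can cause resource shortages on the node.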

systemctl daemon-reload

systemctl restart kubelet

systemctl status kubelet

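Tip: if there are errors, use journalctl -u kubelet -f to view the logs.

Because this is a test environment, the values below are small; production nodes can reserve more.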

allowedUnsafeSysctls:
- "net.core*"
- "net.ipv4.*"
kubeReserved:
  cpu: "200m"
  memory: 100Mi
  ephemeral-storage: 2Gi
systemReserved:
  cpu: "100m"
  memory: 100Mi
  ephemeral-storage: 2Gi
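These reservations follow the node-allocatable formula from the Kubernetes documentation: Allocatable = Capacity - kube-reserved - system-reserved - hard eviction threshold. Below is a worked sketch for memory. The function names are hypothetical, and the 4Gi node capacity is a made-up example rather than a value from this cluster:

```bash
#!/usr/bin/env bash
# Convert a Kubernetes memory quantity with a Gi or Mi suffix to MiB.
to_mi() {
  case $1 in
    *Gi) echo $(( ${1%Gi} * 1024 )) ;;
    *Mi) echo "${1%Mi}" ;;
    *)   echo "unsupported quantity: $1" >&2; return 1 ;;
  esac
}

# allocatable_memory_mi CAPACITY RESERVATION...: capacity minus all reservations, in MiB.
allocatable_memory_mi() {
  local total r
  total=$(to_mi "$1")
  shift
  for r in "$@"; do
    total=$(( total - $(to_mi "$r") ))
  done
  echo "$total"
}

# Test-environment values (kubeReserved, systemReserved, evictionHard memory.available):
# allocatable_memory_mi 4Gi 100Mi 100Mi 100Mi   # prints 3796
# Larger values from the first snippet (1Gi, 1Gi) plus the 100Mi eviction threshold:
# allocatable_memory_mi 4Gi 1Gi 1Gi 100Mi       # prints 1948
```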

Add a ROLES label to the master node

[root@k8s2-master01 containers]# kubectl label node k8s2-master01 node-role.kubernetes.io/master=''

[root@k8s2-master01 containers]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s2-master01 Ready master 163m v1.22.0

k8s2-master02 Ready <none> 163m v1.22.0

k8s2-master03 Ready <none> 163m v1.22.0

k8s2-node01 Ready <none> 163m v1.22.0

k8s2-node02 Ready <none> 163m v1.22.0

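12 Installation summary:

1. kubeadm

2. Binary installation

3. Automated installation

a) Ansible

i. Master node installation does not need to be automated.

ii. Adding Node nodes: write a playbook.

4. Details to note during installation

a) The detailed configuration above

b) In production, etcd must be on a disk separate from the system disk, and it must use SSDs.

c) The Docker data disk should also be separate from the system disk; use SSDs if possible.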